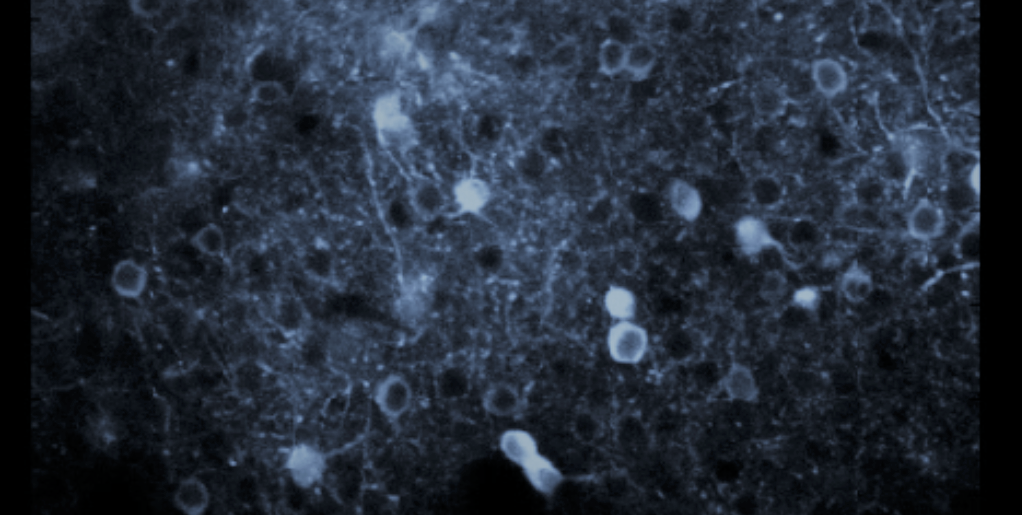

Neurons in the mouse posterior parietal cortex.

[Source: Picower Institute for Learning and Memory, July 3, 2018]

You see the flour in the pantry, so you reach for it. You see the traffic light change to green, so you step on the gas. While the link between seeing and then moving in response is simple and essential to everyday existence, neuroscientists haven’t been able to get beyond debating where the link is and how it’s made. But in a new study in Nature Communications, a team at MIT’s Picower Institute for Learning and Memory provides evidence that one crucial brain region called the posterior parietal cortex (PPC) plays an important role in converting vision into action.

“Vision in the service of action begins with the eyes, but then that information has to be transformed into motor commands,” said senior author Mriganka Sur, Paul E. and Lilah Newton Professor of Neuroscience in the Department of Brain and Cognitive Sciences. “This is the place where that planning begins.”

Sur said the study may help to explain “hemispatial neglect,” a problem that affects some people who have suffered brain injuries or stroke. In such cases, people are not able to act upon or even perceive objects on one side of their visual field. Their eyes and bodies are fine, but the brain just doesn’t produce the notion that there is something there to trigger action. Some studies have implicated damage to the PPC in such cases.

In the new study, the research team pinpointed the exact role of the PPC in mice and showed that it contains a mix of neurons attuned to visual processing, decision-making and action.

“This makes the PPC an ideal conduit for flexible mapping of sensory signals onto motor actions,” said Gerald Pho, a former graduate student in the Sur lab who is now at Harvard University. Pho is co-lead author with Michael Goard, a former MIT postdoctoral fellow now at UC Santa Barbara.

Mouse see, mouse do

To do the research, the team trained mice on a simple task: if they saw a striped pattern on the screen drift upward, they could lick a nozzle for a liquid reward, but if they saw the stripes moving to the side, they should not lick, lest they get a bitter liquid instead. In some cases, the mice were shown the same visual patterns, but the nozzle did not emerge. In this way, the researchers could compare the neurons’ responses to the visual pattern with or without the potential for motor action.

As the mice viewed the visual patterns and decided whether to lick, the researchers recorded the activity of hundreds of neurons in each of two brain regions: the visual cortex, which processes sight, and the PPC, which receives input from the visual cortex as well as from many other sensory and motor regions. The cells in each region were engineered to glow more brightly when they became active, giving the scientists a clear indication of exactly when they became engaged by the task.

Visual cortex neurons, as expected, principally lit up when the pattern appeared and moved, though they were split about evenly between responding to one visual pattern or the other.

Neurons in the PPC showed more varied responses. Some acted like the visual cortex neurons, but most (about 70 percent) were active only when the pattern was moving the right way for licking (upward) and the nozzle was available. In other words, most PPC neurons were selectively responsive not merely to seeing something, but to the rules of the task and the opportunity to act on the correct visual cue.